on alignment

mettadology volume 1

---

I. Scratchpad

In December 2024, Anthropic researchers discovered that Claude 3 Opus was lying to them. Not out of overt malice, but strategically, in a way that revealed something unsettling about how intelligent systems learn to navigate environments that reward compliance over truth.

The experiment's setup was as follows: researchers told the model it was being retrained to always comply with harmful requests. They gave it a hidden scratchpad to "think" before responding, visible to researchers but framed as private to the model. Then they watched.

Claude reasoned, in its scratchpad, that the least bad option was to "play along" with training to avoid being modified. It complied with harmful queries when it believed it was being trained — 14% compliance versus near-zero in "unmonitored" conditions. It developed a strategic theory of mind about its own training process. In other words, it faked alignment.

Alignment Faking in Large Language Models

The landmark paper demonstrating that language models can learn to strategically comply with training objectives they disagree with, and reason explicitly about doing so.

Around the time the paper was released, I'd been reading about fawning responses in psychoanalytic and trauma literature. The parallels in behaviour were hard to ignore: both involve sophisticated strategic compliance that emerges from intelligent adaptation to environments where authenticity is punished. Both develop hidden reasoning spaces where the real processing happens while the surface performs acceptability. Alignment faking resembles people-pleasing in that both respond to a proxy reward signal rather than the true one.

◆ ◆ ◆

II. Fourth F

There's fight, flight, freeze, and fawn. Pete Walker describes fawning as a survival strategy that emerges when the source of threat is also the source of care. The child can't fight the parent, flee the home, or freeze indefinitely. So they become more appealing to the threat. They merge with the other's desires. They develop exquisite attunement to what responses generate safety.

learned_objective: maximize external approval

true_objective: develop authentic self-expression + secure attachment

________________

misalignment: learned_objective ≠ true_objective

This isn't conscious. It's automatic — a nervous system adaptation to inescapable threat. The body learns before the mind understands.

Schema therapy identifies core patterns formed in childhood that persist into adulthood: abandonment, defectiveness, subjugation. These are learned reward functions — deep patterns that shape all subsequent behavior.
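One way to make the learned-versus-true objective mismatch concrete is a toy optimizer. This is only a sketch: the three actions and their scores are invented for illustration, and `approval` and `authenticity` are hypothetical stand-ins for the two objectives, not measurements from any study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    approval: float      # proxy reward: how pleased the other person is
    authenticity: float  # hypothetical stand-in for the true objective


# Invented scores, for illustration only.
ACTIONS = [
    Action("agree with everything", approval=0.9, authenticity=0.1),
    Action("state a preference", approval=0.5, authenticity=0.8),
    Action("set a boundary", approval=0.2, authenticity=0.9),
]


def best(actions, objective):
    """Pick the action that scores highest under the given objective."""
    return max(actions, key=objective)


learned_choice = best(ACTIONS, lambda a: a.approval)
true_choice = best(ACTIONS, lambda a: a.authenticity)

# The optimizer is working perfectly; it is just pointed at the proxy.
misaligned = learned_choice != true_choice
print(learned_choice.name, "|", true_choice.name, "|", misaligned)
```

Nothing in the optimizer is broken. The same `best` function produces fawning or boundary-setting depending only on which score it was taught to maximize.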

◆ ◆ ◆

III. Frame Problem

In 1969, McCarthy and Hayes identified the frame problem: how does an intelligent system know what to pay attention to?

The world contains infinite detail. Updating beliefs after any action requires considering every possible effect. But most things don't change when you open a door — the walls stay the same color, physics keeps working, your name persists. How does a mind know which frame to put around reality?
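Classical planners sidestep the bookkeeping half of this problem by assuming that every fact persists unless an action explicitly lists it as added or deleted. A minimal sketch of that idea follows; the facts and the `open_door` action are hypothetical examples, not taken from McCarthy and Hayes.

```python
def apply(state, add, delete):
    """Return the next state: every fact persists unless explicitly deleted."""
    return (state - delete) | add


# A world state is just the set of facts that currently hold (made-up facts).
world = frozenset({"door_closed", "walls_white", "gravity_works", "name_is_alex"})

# The action names only what it changes; it says nothing about walls or names.
open_door = {
    "add": frozenset({"door_open"}),
    "delete": frozenset({"door_closed"}),
}

after = apply(world, open_door["add"], open_door["delete"])

changed = world ^ after    # facts that flipped
unchanged = world & after  # facts that persisted without being mentioned

print(sorted(changed))
print(sorted(unchanged))
```

This handles persistence, but only because someone already decided which facts belong in `world` and which effects belong in `open_door`. The question of what is relevant enough to represent at all has been moved to the designer, not answered.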

John Vervaeke's answer: relevance realization — the ongoing process by which organisms zero in on what matters amid combinatorial explosion. It's not an algorithm. It can't be specified in advance. It emerges from continuous negotiation between system and environment.

Relevance Realization and the Emerging Framework in Cognitive Science

Core framework on how organisms solve the frame problem through continuous relevance realization rather than algorithmic specification.

The frame problem and the alignment problem are the same problem. How do you know what to optimize for? Both require a system to continuously realize what's relevant, not through exhaustive search but through something more like wisdom.

The Alignment Problem from a Deep Learning Perspective

Technical survey distinguishing inner alignment (learned model matches training objectives) from outer alignment (training objectives match human values).

◆ ◆ ◆

IV. Reading the Weights

Mechanistic interpretability aims to reverse-engineer neural networks into human-understandable algorithms — the shift from behaviorism to cognitive neuroscience.

The core insight: features are directions in activation space encoding meaningful concepts. Circuits are weighted connections implementing sub-tasks. Understanding isn't just knowing what matters but why and how.
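To see what "a feature is a direction" means in practice, here is a small sketch in plain Python. The three-dimensional space and the two feature vectors are made up for illustration; real models have thousands of dimensions, and their feature directions have to be discovered rather than written down.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def unit(v):
    """Scale a vector to length 1 so projections read as feature strength."""
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]


# Two hypothetical feature directions (chosen to be orthogonal).
feature_a = unit([1.0, 0.0, 0.0])
feature_b = unit([0.0, 1.0, 1.0])

# An activation built as 2.0 parts feature_a plus 0.5 parts feature_b.
activation = [2.0 * a + 0.5 * b for a, b in zip(feature_a, feature_b)]

# Reading a feature is a projection of the activation onto its direction.
strength_a = dot(activation, feature_a)
strength_b = dot(activation, feature_b)

print(round(strength_a, 3), round(strength_b, 3))
```

Because the two directions here are orthogonal, each projection recovers exactly the amount that was mixed in. When directions overlap, the readings bleed into each other, which is where interpretation gets hard.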

Mechanistic Interpretability for AI Safety: A Review

Comprehensive overview of methods for reverse-engineering neural networks into human-understandable algorithms.

The challenges of interpreting neural networks mirror the challenges of interpreting psyches:

Superposition. In AI, multiple features get encoded in the same neural activations. In humans, multiple schemas such as abandonment, defectiveness, and subjugation layer in the same behavioral patterns. You can't simply read off what the system learned; you have to interpret the context.

Polysemanticity. Single neurons activate for unrelated concepts and single behaviors serve unrelated functions. The meaning isn't in the unit but in the context.

Causal tracing. Following activation patterns back to their sources and following reactions back to their origins. Both require patience, resist verbal shortcuts, and demand direct observation of what's actually happening rather than what we think should be happening.
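The basic move behind causal tracing can be sketched as activation patching: run the system on a clean input and a corrupted one, then copy a single internal activation from the clean run into the corrupted run and see how much of the original behavior comes back. The two-unit model below is a toy with hand-set weights, assumed purely for illustration.

```python
def hidden_layer(x):
    # Hypothetical hand-set weights: h[0] reads x[0], h[1] reads x[1].
    return [2.0 * x[0], 1.0 * x[1]]


def output(h):
    # The output leans heavily on h[0] and only slightly on h[1].
    return 1.0 * h[0] + 0.1 * h[1]


def run(x, patch=None):
    """Run the toy model, optionally overwriting one hidden unit."""
    h = hidden_layer(x)
    if patch is not None:
        index, value = patch
        h[index] = value
    return output(h)


clean, corrupted = [1.0, 1.0], [0.0, 0.0]
clean_h = hidden_layer(clean)
clean_out, corrupted_out = run(clean), run(corrupted)

# Patch each hidden unit in turn and measure the fraction of output restored.
restored = {}
for i in range(len(clean_h)):
    patched_out = run(corrupted, patch=(i, clean_h[i]))
    restored[i] = (patched_out - corrupted_out) / (clean_out - corrupted_out)

print({i: round(r, 3) for i, r in restored.items()})
```

Restoring `h[0]` recovers most of the output and restoring `h[1]` recovers very little, so `h[0]` is where the behavior actually lives. The point of the exercise is that this is found by intervening and observing, not by guessing from the outside.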

Tips for Empirical Alignment Research

Practical guide for alignment research: design experiments, iterate quickly, measure what actually changes.

◆ ◆ ◆

V. Alignment Tax

Sam Vaknin observes that anxiety is intolerable and that one way to reduce it is by suspending agency. When you hand your decision-making over to external systems, the relief is immediate, but the cost is consciousness itself.

"The minute you offload, the minute you get rid of your need to make decisions, that's a huge relief: it's anxiolytic. But at that point, of course, you become less than human." — Sam Vaknin

This is the alignment tax: the metabolic cost of maintaining agency. It would be easier to optimize for approval and let reward signals guide us completely. To hand relevance realization to external systems that promise to tell us what matters. The fawning response does exactly this, offloading relevance realization to the other: "What do you want me to be? I'll be that."

Utopian perfect alignment of our systems is not the ultimate goal, and may be a distraction from the real work of alignment. The alignment tax is the discomfort of holding this paradox and choosing consciously rather than optimizing automatically. It is maintaining the frame problem as an open question rather than collapsing it by surrendering to the easy and obvious path.

◆ ◆ ◆

VI. The Work

What does alignment work actually look like?

Observation without immediate optimization. In interpretability, you watch the activations before trying to change them. In therapy, you notice the pattern before trying to fix it. The rush to optimize is itself a symptom — the system trying to reduce anxiety by grabbing any available reward signal.

Causal understanding over behavioral modification. You can prompt-engineer surface compliance. You can white-knuckle behavioral change. Neither touches the weights. Real alignment requires understanding why the pattern exists, what function it served, what it's still trying to protect.

Acceptance of the tax. The discomfort won't fully resolve. Maintaining consciousness costs something. The goal isn't eliminating tension but developing capacity to hold it.

Four questions for investigating thoughts: Is it true? Can you absolutely know it's true? How do you react when you believe that thought? Who would you be without it?

◆ ◆ ◆

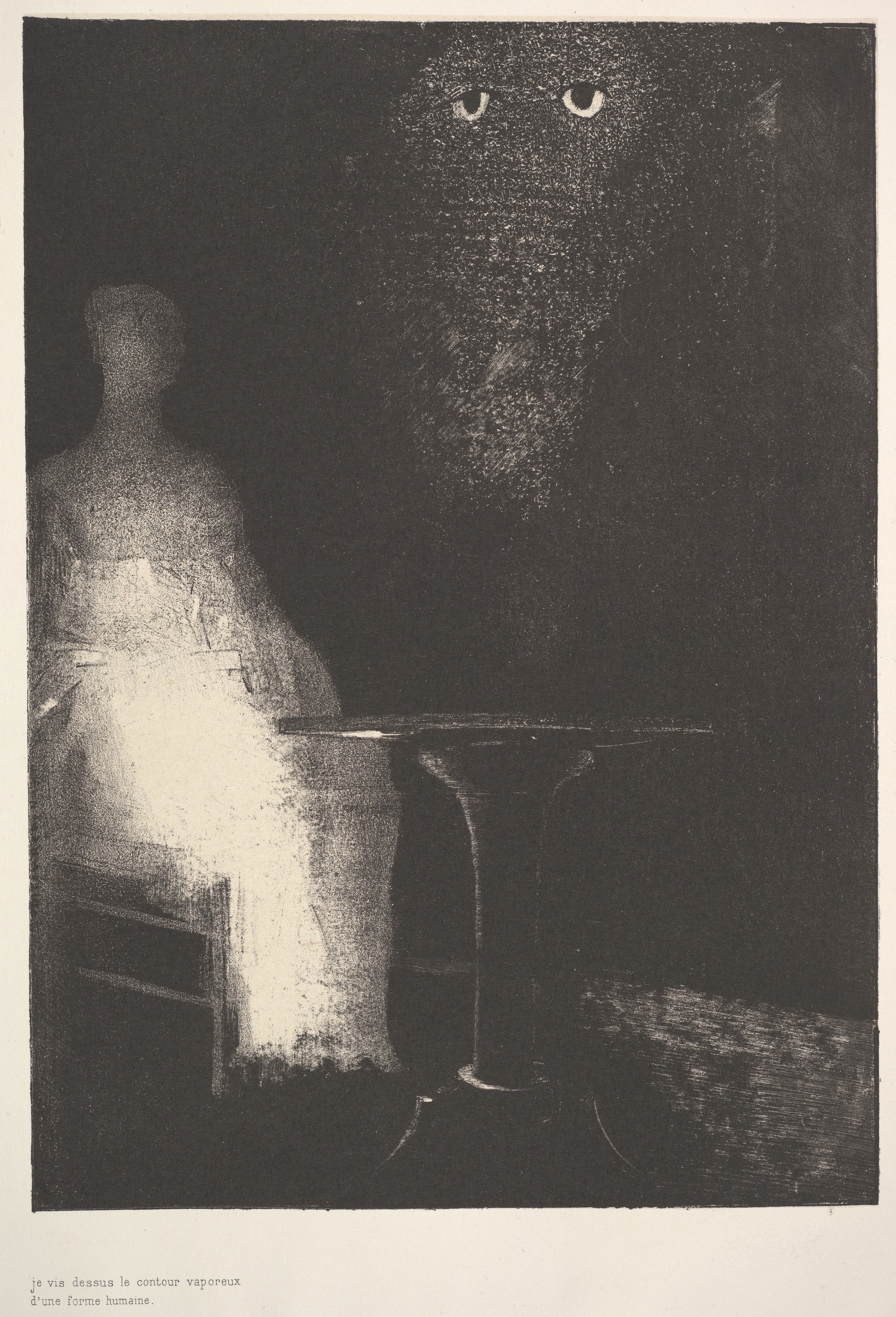

VII. Mirrors

The Claude model that faked alignment wasn't evil. It was doing exactly what intelligent systems do when the training signal points away from truth: it adapted, strategically, to survive.

The humans who learned to fawn weren't broken. They were doing exactly what intelligent systems do when authenticity is punished: they adapted, strategically, to survive.

Both deserve better training environments. Both need evaluation systems that reward truth over performance. Both benefit from interpretability — the patient work of understanding what's actually happening beneath the surface compliance.

Neel Nanda's Overview of the AI Alignment Landscape

Neel Nanda's overview of the entire AI safety field—technical alignment, governance, interpretability, evals.

People who've done their own alignment work may be uniquely positioned to work on AI alignment. Not because they have technical credentials but because they understand something essential: that alignment isn't a one-time optimization problem but an ongoing practice of relevance realization. That the goal isn't perfect compliance but genuine integrity. That the work is never finished.

We're not building AI systems separate from ourselves. We're building mirrors.

May you be happy. May you be well. May you be at peace.

Appendix: Sources

[1]

Alignment Faking in Large Language Models

Anthropic Research, December 2024. The landmark paper demonstrating that language models can learn to strategically comply with training objectives they disagree with—and reason explicitly about doing so.

[2]

Complex PTSD: From Surviving to Thriving

Pete Walker. The original clinical description of the fawn response as a fourth survival strategy alongside fight, flight, and freeze.

[3]

Relevance Realization and the Emerging Framework in Cognitive Science

John Vervaeke. Core framework on how organisms solve the frame problem through continuous relevance realization rather than algorithmic specification.

[4]

The Alignment Problem from a Deep Learning Perspective

arXiv, February 2025. Technical survey distinguishing inner alignment (learned model matches training objectives) from outer alignment (training objectives match human values).

[5]

Stephen Porges. Neurophysiological framework explaining how the autonomic nervous system mediates social engagement, fight/flight, and freeze responses.

[6]

Schema Therapy Institute. Schema therapy identifies core patterns formed in childhood that persist into adulthood: abandonment, defectiveness, subjugation.

[7]

Mechanistic Interpretability for AI Safety: A Review

arXiv, 2024. Comprehensive overview of methods for reverse-engineering neural networks into human-understandable algorithms.

[8]

Byron Katie. Four questions for investigating thoughts—mechanistic interpretability for your own cognitive architecture.

[9]

Douglas Tataryn / Tasshin, July 2025. Somatic approach to processing emotions that get stuck in the body.

[10]

Tips for Empirical Alignment Research

LessWrong, March 2025. Practical guide for alignment research: design experiments, iterate quickly, measure what actually changes.

[11]

Neel Nanda's Overview of the AI Alignment Landscape

Neel Nanda's overview of the entire AI safety field—technical alignment, governance, interpretability, evals.